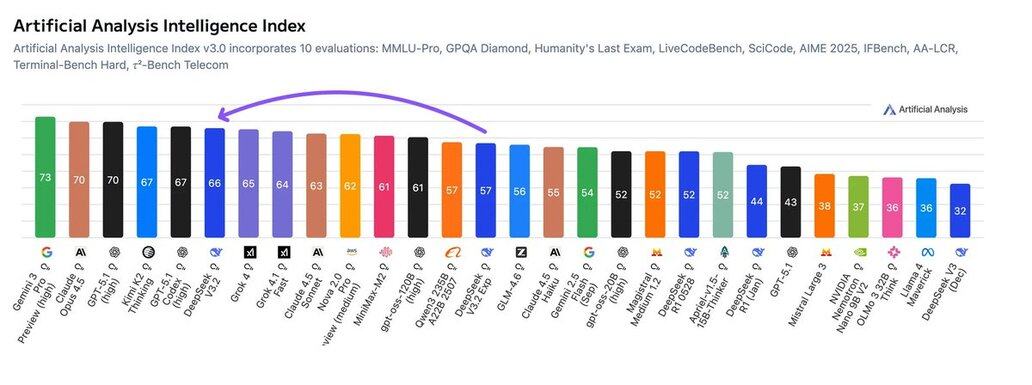

DeepSeek V3.2 is the #2 most intelligent open weights model and also ranks ahead of Grok 4 and Claude Sonnet 4.5 (Thinking) - it takes DeepSeek Sparse Attention out of ‘experimental’ status and couples it with a material boost to intelligence

@deepseek_ai V3.2 scores 66 on the Artificial Analysis Intelligence Index; a substantial intelligence uplift over DeepSeek V3.2-Exp (+9 points) released in September 2025. DeepSeek has switched its main API endpoint to V3.2, with no pricing change from the V3.2-Exp pricing - this puts pricing at just $0.28/$0.42 per 1M input/output tokens, with 90% off for cached input tokens.
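As a rough illustration of what this pricing means per request, the sketch below estimates cost from token counts. The function name and parameters are hypothetical, and it assumes "90% off" means cached input tokens are billed at 10% of the list input price.

```python
# Prices quoted in the post, in USD per 1M tokens.
INPUT_PER_M = 0.28
OUTPUT_PER_M = 0.42
CACHE_DISCOUNT = 0.90  # assumption: cached input is billed at 10% of list price


def request_cost_usd(input_tokens, output_tokens, cached_input_tokens=0):
    """Estimate the cost of one request (hypothetical helper).

    cached_input_tokens is the portion of input_tokens served from cache.
    """
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    fresh_tokens = input_tokens - cached_input_tokens
    cached_price_per_m = INPUT_PER_M * (1 - CACHE_DISCOUNT)
    total = (
        fresh_tokens * INPUT_PER_M
        + cached_input_tokens * cached_price_per_m
        + output_tokens * OUTPUT_PER_M
    )
    return total / 1_000_000


# Example: a 100k-token prompt, 80k of it cached, with a 5k-token response.
print(f"${request_cost_usd(100_000, 5_000, cached_input_tokens=80_000):.4f}")
```

Under these assumptions, a prompt that is mostly cached costs a small fraction of the uncached input price, so output tokens dominate the bill for long, repeated contexts.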

Since the original DeepSeek V3 release ~11 months ago in late December 2024, DeepSeek’s V3 architecture, with 671B total/37B active parameters, has gone from scoring 32 to scoring 66 on the Artificial Analysis Intelligence Index.

DeepSeek has also released V3.2-Speciale, a reasoning-only variant with enhanced capabilities but significantly higher token usage. This is a common tradeoff in reasoning models, where more extensive reasoning generally yields higher intelligence scores at the cost of more output tokens. V3.2-Speciale is available via DeepSeek's first-party API until December 15.

V3.2-Speciale currently scores lower on the Artificial Analysis Intelligence Index (59) than V3.2 (Reasoning, 66) because DeepSeek's first-party API does not yet support tool calling for this model. If V3.2-Speciale matched V3.2's tau2 score (91%) with tool calling enabled, it would score ~68 on the Intelligence Index, making it the most intelligent open-weights model. V3.2-Speciale uses 160M output tokens to run the Artificial Analysis Intelligence Index, nearly 2x the number of tokens used by V3.2 in reasoning mode.
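The ~68 estimate can be reproduced with simple arithmetic. The sketch below assumes the Intelligence Index is an equal-weighted average of its 10 benchmarks (the post mentions 10 benchmarks but does not state the weighting), so moving one benchmark from 0 to 91 adds 91/10 points. The function name is hypothetical.

```python
N_BENCHMARKS = 10      # "10 benchmarks in our Intelligence Index"
SPECIALE_INDEX = 59    # reported score, with tau2 counted as 0
TAU2_CURRENT = 0       # no tool calling on the first-party API
TAU2_IF_MATCHED = 91   # V3.2's tau2 score, in percent


def counterfactual_index(current_index, old_score, new_score, n=N_BENCHMARKS):
    """Index after changing one benchmark score, assuming equal weights."""
    return current_index + (new_score - old_score) / n


estimate = counterfactual_index(SPECIALE_INDEX, TAU2_CURRENT, TAU2_IF_MATCHED)
print(f"Estimated index with tool calling: ~{estimate:.0f}")
```

Because the published 59 is itself rounded, the result is only approximate, but it lands at the ~68 quoted above.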

DeepSeek V3.2 uses an identical architecture to V3.2-Exp, which introduced DeepSeek Sparse Attention (DSA) to reduce the compute required for long context inference. Our Long Context Reasoning benchmark showed no intelligence cost from the introduction of DSA. DeepSeek reflected the cost advantage of V3.2-Exp by cutting pricing on its first-party API from $0.56/$1.68 to $0.28/$0.42 per 1M input/output tokens - a 50% and 75% reduction in input and output token pricing respectively.

Key benchmarking takeaways:

➤🧠 DeepSeek V3.2: In reasoning mode, DeepSeek V3.2 scores 66 on the Artificial Analysis Intelligence Index and places equivalently to Kimi K2 Thinking (67) and ahead of Grok 4 (65), Grok 4.1 Fast (Reasoning, 64) and Claude Sonnet 4.5 (Thinking, 63). It demonstrates notable uplifts compared to V3.2-Exp (57) across tool use, long context reasoning and coding.

➤🧠 DeepSeek V3.2-Speciale: V3.2-Speciale scores higher than V3.2 (Reasoning) across 7 of the 10 benchmarks in our Intelligence Index. V3.2-Speciale now holds the highest and second highest scores amongst all models for AIME25 (97%) and LiveCodeBench (90%) respectively. However, as mentioned above, DeepSeek’s first-party API for V3.2-Speciale does not support tool calling, so the model scores 0 on the tau2 benchmark.

➤📚 Hallucination and Knowledge: DeepSeek V3.2-Speciale and V3.2 are the highest ranked open weights models on the Artificial Analysis Omniscience Index, scoring -19 and -23 respectively. Proprietary models from Google, Anthropic, OpenAI and xAI typically lead this index.

➤⚡ Non-reasoning performance: In non-reasoning mode, DeepSeek V3.2 scores 52 on the Artificial Analysis Intelligence Index (+6 points vs. V3.2-Exp) and is the #3 most intelligent non-reasoning model. DeepSeek V3.2 (Non-reasoning) matches the intelligence of DeepSeek R1 0528, a frontier reasoning model from May 2025, highlighting the rapid intelligence gains achieved through pre-training and RL improvements this year.

➤⚙️ Token efficiency: In reasoning mode, DeepSeek V3.2 used more tokens than V3.2-Exp to run the Artificial Analysis Intelligence Index (86M, up from 62M). Token usage remains similar in the non-reasoning variant. V3.2-Speciale demonstrates significantly higher token usage, using ~160M output tokens, ahead of Kimi K2 Thinking (140M) and Grok 4 (120M).

➤💲Pricing: DeepSeek has not updated per-token pricing for its first-party API, and all three variants are available at $0.28/$0.42 per 1M input/output tokens.
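To put the token efficiency and pricing takeaways together, the sketch below multiplies the output token counts quoted above by the $0.42 per 1M output price. It is a lower bound only: the post does not give input token counts, so input costs are left out, and the dictionary and function names are hypothetical.

```python
OUTPUT_PER_M = 0.42  # USD per 1M output tokens, from the post

# Output tokens used to run the Intelligence Index, as quoted in the post.
OUTPUT_TOKENS = {
    "V3.2-Exp (Reasoning)": 62_000_000,
    "V3.2 (Reasoning)": 86_000_000,
    "V3.2-Speciale": 160_000_000,
}


def output_cost_usd(output_tokens, price_per_m=OUTPUT_PER_M):
    """Output-token cost only; input-token cost is not included."""
    return output_tokens * price_per_m / 1_000_000


for model, tokens in OUTPUT_TOKENS.items():
    print(f"{model}: ${output_cost_usd(tokens):.2f} in output tokens")

# Ratio behind the "nearly 2x" comparison between Speciale and V3.2.
ratio = OUTPUT_TOKENS["V3.2-Speciale"] / OUTPUT_TOKENS["V3.2 (Reasoning)"]
print(f"Speciale vs. V3.2 output tokens: {ratio:.2f}x")
```

On these figures, the higher verbosity of V3.2 and V3.2-Speciale translates directly into higher run cost, since all three are billed at the same per-token rate.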

Other model details:

➤ ©️ Licensing: DeepSeek V3.2 is available under the MIT License.

➤ 🌐 Availability: DeepSeek V3.2 is available via the DeepSeek API, where it has replaced DeepSeek V3.2-Exp. Users can access DeepSeek V3.2-Speciale via a temporary DeepSeek API endpoint until December 15. Given the intelligence uplift in this release, we expect a number of third-party providers to serve this model soon.

➤ 📏 Size: DeepSeek V3.2 has 671B total parameters and 37B active parameters. This is the same as all previous models in the DeepSeek V3 and R1 series.

At DeepSeek's first-party API pricing of $0.28/$0.42 per 1M input/output tokens, V3.2 (Reasoning) sits on the Pareto frontier of the Intelligence vs. Cost to Run Artificial Analysis Intelligence Index chart.

DeepSeek V3.2-Speciale is the highest ranked open weights model on the Artificial Analysis Omniscience Index, while V3.2 (Reasoning) matches Kimi K2 Thinking.

DeepSeek V3.2 is more verbose than its predecessor in reasoning mode, using more output tokens to run the Artificial Analysis Intelligence Index (86M vs. 62M).

Compare how DeepSeek V3.2 performs relative to models you are using or considering at:

